The world of artificial intelligence is growing at an unprecedented pace, and with it comes the need for comprehensive benchmarking tools that can provide insights into the performance of various inference engines on different hardware platforms. The UL Procyon AI Inference Benchmark for Windows is an exciting addition to our lab. Designed for technology professionals, this benchmark should change how we analyze and present hardware performance data.

UL Procyon AI Inference Benchmark

The UL Procyon AI Inference Benchmark for Windows is a powerful tool specifically designed for hardware enthusiasts and professionals evaluating the performance of various AI inference engines on disparate hardware within a Windows environment.

With this benchmark tool in our lab, we can provide our readers with insights and benchmark results to assist in making data-driven decisions when choosing an engine that delivers optimal performance on their specific hardware configurations.

Featuring an array of AI inference engines from top-tier vendors, the UL Procyon AI Inference Benchmark caters to a broad spectrum of hardware setups and requirements. The benchmark score provides a convenient and standardized summary of on-device inferencing performance. This enables us to compare and contrast different hardware setups in real-world situations without requiring in-house solutions.

In the world of hardware reviews, the UL Procyon AI Inference Benchmark for Windows is a game-changer. By streamlining the process of measuring AI performance, this benchmark empowers reviewers and users alike to make informed decisions when selecting and optimizing hardware for AI-driven applications. The benchmark’s focus on practical performance evaluation ensures that hardware enthusiasts can truly understand the capabilities of their systems and make the most of their AI projects.

Key Features

- Tests based on common machine-vision tasks using state-of-the-art neural networks

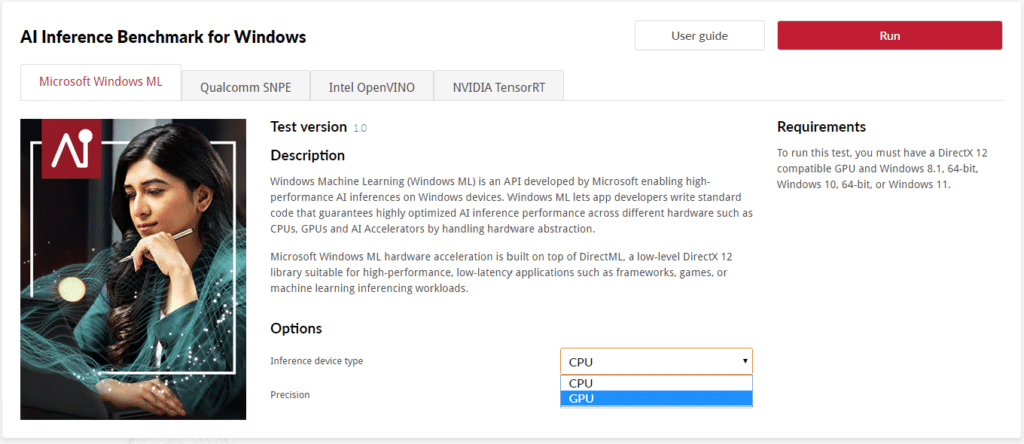

- Measure inference performance using the CPU, GPU, or dedicated AI accelerators

- Benchmark with NVIDIA TensorRT, Intel OpenVINO, Qualcomm SNPE, and Microsoft Windows ML

- Verify inference engine implementation and compatibility

- Optimize drivers for hardware accelerators

- Compare float and integer-optimized model performance

- Simple to set up and use via the UL Procyon application or the command line

UL Procyon AI Inference Benchmark – Neural Network Models

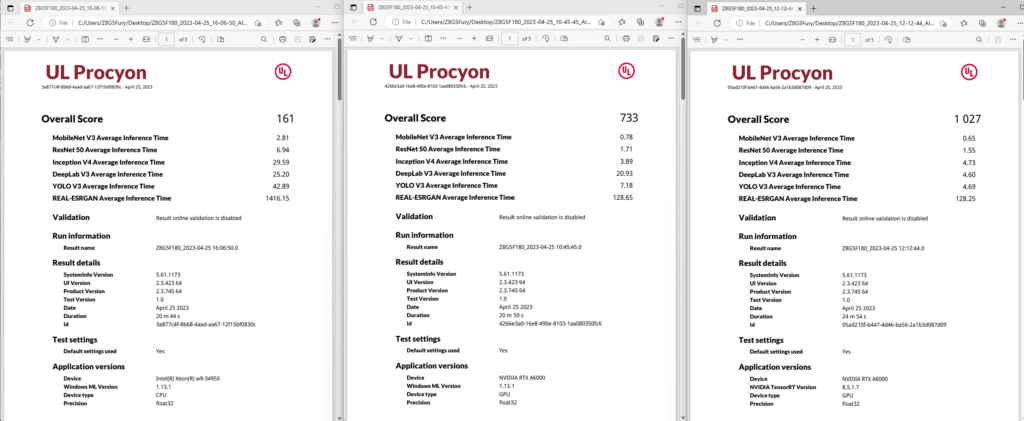

The UL Procyon AI Inference Benchmark incorporates a diverse array of neural network models, including MobileNet V3, Inception V4, YOLO V3, DeepLab V3, Real-ESRGAN, and ResNet 50. These models cover tasks such as image classification, object detection, semantic image segmentation, and super-resolution image reconstruction.

- MobileNet V3 is a compact visual recognition model specifically designed for mobile devices. It excels at image classification tasks, identifying the main subject of an image by outputting a list of probabilities for the content in the image.

- Inception V4 is a state-of-the-art model for image classification tasks. It is a broader and deeper model compared to MobileNet, designed for higher accuracy. Like MobileNet, it identifies the subject of an image and outputs a list of probabilities for the detected content.

- YOLO V3, which stands for You Only Look Once, is an object detection model. Its primary goal is to identify the location of objects in an image. YOLO V3 generates bounding boxes around detected objects and provides probabilities for the confidence of each detection.

- DeepLab V3 is an image segmentation model that focuses on clustering the pixels in an image that belong to the same object class. This semantic image segmentation technique labels each region of the image according to the object class it belongs to.

- Real-ESRGAN is a super-resolution model trained on synthetic data. It specializes in increasing the resolution of an image by reconstructing a higher-resolution image from a lower-resolution counterpart. In the benchmark, it upscales a 250×250 image to a 1000×1000 image.

- ResNet 50 is an image classification model that introduced the novel concept of residual blocks, enabling the training of deeper neural networks than previously possible. It identifies the subject of an image and outputs a list of probabilities for the detected content.
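
Several of the models above report their answer as a list of probabilities. As a rough illustration of what that output looks like, the Python sketch below turns raw scores into probabilities with a softmax and keeps the most likely labels. The labels, scores, and function names are made up for this example and are not taken from the benchmark or its models.

```python
import math


def softmax(logits):
    """Convert raw model scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(labels, logits, k=3):
    """Return the k most likely (label, probability) pairs, best first."""
    probs = softmax(logits)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical labels and scores, for illustration only.
labels = ["cat", "dog", "car", "tree"]
logits = [2.0, 1.0, 0.1, -1.0]

for label, prob in top_k(labels, logits):
    print(f"{label}: {prob:.3f}")
```

A real classifier produces one score per class it was trained on, often a thousand or more, but the ranking step works the same way.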

The UL Procyon AI Inference Benchmark includes both float- and integer-optimized versions of each model. This makes it easy to compare how the same model performs at each precision on compatible hardware, giving a fuller picture of a system’s capabilities.
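
To give a feel for what separates a float model from an integer-optimized one, here is a minimal Python sketch of symmetric 8-bit quantization. This is a generic textbook scheme chosen for illustration. We are not claiming it is how the benchmark's models were converted, and the weight values and function names are invented.

```python
def quantize(values, num_bits=8):
    """Map floats to signed integers using one symmetric scale factor."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8-bit
    largest = max(abs(v) for v in values)
    scale = largest / qmax if largest else 1.0
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the integer representation."""
    return [q * scale for q in quantized]


# Made-up weights, for illustration only.
weights = [0.82, -0.41, 0.05, -1.27, 0.63]

q, scale = quantize(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("integers:", q)
print("scale:", scale)
print("largest rounding error:", max_error)
```

The integer version stores each value in a single byte at the cost of a small rounding error, which is the trade-off that float versus integer comparisons are meant to expose.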

We ran the benchmark on our HP Z8 Fury G5 with quad NVIDIA A6000 GPUs. It won’t run Crysis, but it can run Crysis 2.

Result file: Z8G5F180_2023-04-25_12-12-44_AITensorRT

Future Implications

We’re looking forward to the positive impact the UL Procyon AI Inference Benchmark will have on StorageReview.com’s presentation of new GPUs and CPUs in the coming years. Given UL’s solid industry expertise in the benchmarking space, this benchmark will help our team assess and present the general AI performance of different inference engine implementations across a range of hardware more efficiently.

Moreover, the detailed metrics provided by the benchmark, such as inference times, will enable a deeper and more granular understanding of new hardware capabilities and how they evolve. The standardization this benchmark brings also ensures consistency when comparing AI performance across different hardware configurations, both internally and among our friends in the industry.
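
For readers curious what an inference-time metric involves, the Python sketch below times repeated calls to a workload and summarizes the results. It is a generic measurement pattern rather than a description of how UL Procyon computes its numbers, and `fake_inference` is a hypothetical stand-in for a real model call.

```python
import statistics
import time


def time_inference(run_once, warmup=3, iterations=20):
    """Time repeated calls to run_once and return durations in milliseconds."""
    for _ in range(warmup):
        run_once()  # warm-up runs are discarded
    durations_ms = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_once()
        durations_ms.append((time.perf_counter() - start) * 1000.0)
    return durations_ms


def summarize(durations_ms):
    """Reduce a list of per-run durations to a few summary statistics."""
    return {
        "runs": len(durations_ms),
        "mean_ms": statistics.mean(durations_ms),
        "median_ms": statistics.median(durations_ms),
        "max_ms": max(durations_ms),
    }


def fake_inference():
    """Hypothetical placeholder workload standing in for a model call."""
    sum(i * i for i in range(10_000))


print(summarize(time_inference(fake_inference)))
```

Discarding warm-up runs and reporting the median alongside the mean helps keep one slow outlier from skewing the picture.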

Closing Thoughts

The UL Procyon AI Inference Benchmark for Windows is a promising new tool for evaluating and presenting hardware performance data. With a host of features and an extensive range of neural network models, this benchmark should serve technology professionals well, providing the data needed to make well-informed decisions and optimize hardware selection for AI-based applications.

As we integrate this benchmark into our lab, we are thrilled to explore the many ways it will enhance our analysis and presentation of cutting-edge CPUs, GPUs, and servers in the future. This will get us closer to looking at key hardware components in their natural environment, allowing us to deliver more “solutions” results to the industry.
